XplaiNLP Research Group

XplaiNLP: Explainable and Interpretable NLP for Trustworthy and Meaningful Decision Support in High-Stakes Domains

At the XplaiNLP research group at the Quality and Usability Lab, TU Berlin, we research the future of Intelligent Decision Support Systems (IDSS) by developing AI that is explainable, trustworthy, and human-centered. Our research spans the entire IDSS pipeline, integrating advances in NLP/(M)LLMs, XAI/interpretability, HCI, and legal analysis to ensure that AI-driven decision-making aligns with ethical and societal values.

We focus on high-risk AI applications where human oversight is critical, including disinformation detection, social media analysis, medical data processing, and legal AI systems.

Our Applications: Tackling High-Risk AI Challenges

We develop and deploy AI tools for real-world decision-making scenarios, including:

- Disinformation Detection & Social Media Analysis: Investigating mis- and disinformation, hate speech, propaganda, and foreign information manipulation and interference (FIMI) using advanced NLP, XAI, and interpretability methods.

- Medical Data Processing & Trustworthy AI in Healthcare: Developing AI tools that simplify access to medical information, improve faithfulness and factual consistency in medical text generation, and support clinicians in interpreting AI-generated recommendations.

- Legal & Ethical AI for High-Stakes Domains: Ensuring AI decision support complies with regulatory standards, enhances explainability in legal contexts, and aligns with ethical AI principles.

Through interdisciplinary collaboration, hands-on research, and mentorship, XplaiNLP is at the forefront of shaping AI that is not only powerful but also transparent, fair, and accountable. Our goal is to set new standards for AI-driven decision support, ensuring that these technologies serve society responsibly and effectively.

Research

Advancing Transparent and Trustworthy AI for Decision Support in High-Stakes Domains

Explainability of Large Language Models

Development of explanations (such as post-hoc explanations, causal reasoning, and chain-of-thought prompting) for transparent AI models. We prioritize human-centered XAI, developing explanations that can be personalized to user needs at different levels of abstraction and detail. Development of methods to verify model faithfulness, ensuring that explanations accurately reflect the model's actual internal decision-making process.

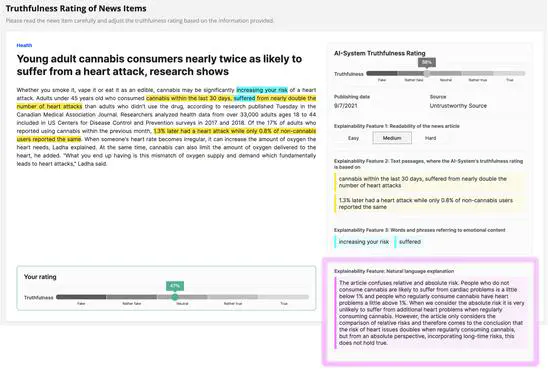

Mis- and Disinformation Detection

Develop and apply LLMs for fake news and hate speech detection. Develop and use knowledge bases of known fakes and facts. Use retrieval-augmented generation (RAG) to support human fact-checking. Analyze the factuality of generated content for summarization and knowledge enrichment.

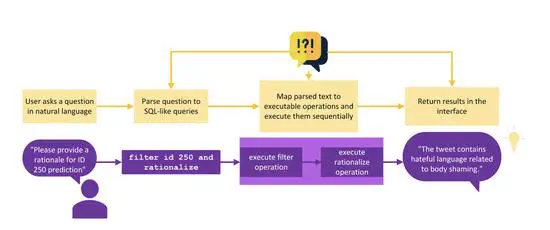

Human Interaction with LLMs

Beyond fine-tuning LLMs for several use cases, we also study human interaction with LLMs to make their outputs useful for the use cases above: designing and validating IDSS for fake news detection; implementing and validating human-meaningful explanations to improve transparency of and trust in the system's decisions; and analyzing legal requirements under the AI Act, DSA, DA, and GDPR so that the IDSS design and LLM implementation comply with the relevant legal obligations.

Medical Data and Privacy

Develop and use LLMs to reliably anonymize text-based medical records for open-source publishing. Apply LLM-based anonymization to text data from other sensitive use cases for open-source publishing.

News

Projects

Running

NEWS-POLYGRAPH

Verification and Extraction of Disinformation Narratives with Individualized Explanations

VERANDA

Trustworthy Anonymization of Sensitive Patient Records for Remote Consultation (VERANDA)

VeraXtract

Verification and Extraction of Disinformation Narratives with Individualized Explanations

fakeXplain

Transparent and meaningful explanations in the context of disinformation detection

PSST

Removing identifying features in speech, improving interactions between devices and cloud services, and creating new ways to assess privacy threats

ORCHESTRA

Orchestrating Reliable, Compliant, and eXplainable Agentic AI Workflows

Under Review

Fake-O-Meter

Multimodal AI-based disinformation assistant for awareness and resilience in dealing with media disinformation

German-Israeli Project Cooperation (Deutsch-Israelische Projektkooperation, DIP)

Adaptive AI for High-Stakes Decision Processes: Balancing Automation and Human Control

Past

ateSDG

Analyzing companies' sustainability reports and classifying them according to their contribution to one or more SDGs

DFG project LocTrace

Evaluation of different methods for the monetary valuation of privacy