Table Understanding and (Multimodal) LLMs: A Cross-Domain Case Study on Scientific vs. Non-Scientific Data

Ekaterina Borisova, Fabio Barth, Nils Feldhus, Raia Abu Ahmad, Malte Ostendorff, Pedro Ortiz Suarez, Georg Rehm, and Sebastian Möller

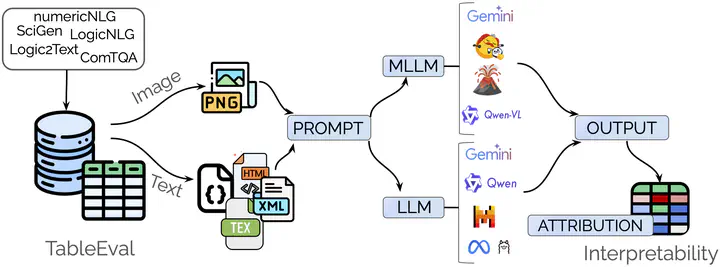

Schematic representation of the main phases in our experiments: 1. Develop TableEval dataset, 2. Evaluate each (M)LLM on individual data subsets from TableEval using various table representations (Image, LaTeX, XML, HTML, Dict), 3. Apply interpretability tools to the output yielding post-hoc feature attributions (e.g., using gradient-based saliency) which signify the importance of each token with respect to the model’s output.

Schematic representation of the main phases in our experiments: 1. Develop TableEval dataset, 2. Evaluate each (M)LLM on individual data subsets from TableEval using various table representations (Image, LaTeX, XML, HTML, Dict), 3. Apply interpretability tools to the output yielding post-hoc feature attributions (e.g., using gradient-based saliency) which signify the importance of each token with respect to the model’s output.Abstract

Tables are among the most widely used tools for representing structured data in research, business, medicine, and education. Although LLMs demonstrate strong performance in downstream tasks, their efficiency in processing tabular data remains underexplored. In this paper, we investigate the effectiveness of both text-based and multimodal LLMs on table understanding tasks through a cross-domain and cross-modality evaluation. Specifically, we compare their performance on tables from scientific vs. non-scientific contexts and examine their robustness on tables represented as images vs. text. Additionally, we conduct an interpretability analysis to measure context usage and input relevance. We also introduce the TableEval benchmark, comprising 3017 tables from scholarly publications, Wikipedia, and financial reports, where each table is provided in five different formats: Image, Dictionary, HTML, XML, and LaTeX. Our findings indicate that while LLMs maintain robustness across table modalities, they face significant challenges when processing scientific tables.