Explainability of Large Language Models

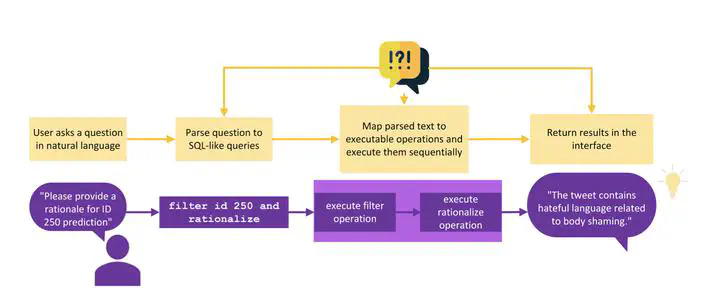

Illustration of how natural language queries from users are parsed into executable operations

This research area develops explanations (such as post-hoc explanations, causal reasoning, and Chain-of-Thought prompting) for transparent AI models. Human-centered XAI is prioritized so that explanations can be personalized to user needs at different levels of abstraction and detail. The work also covers methods to verify model faithfulness, ensuring that explanations or predictions accurately reflect the model's actual internal decision-making process.

Wang, Qianli, et al. (2024) “Cross-Refine: Improving Natural Language Explanation Generation by Learning in Tandem.” arXiv:2409.07123

Villa-Arenas, Luis Felipe, et al. (2024) “Anchored Alignment for Self-Explanations Enhancement.” arXiv:2410.13216

Wang, Qianli, et al. (2025) “FitCF: A Framework for Automatic Feature Importance-guided Counterfactual Example Generation.” arXiv:2501.00777

Feldhus, Nils, et al. (2024) “Towards Modeling and Evaluating Instructional Explanations in Teacher-Student Dialogues.” GoodIT '24

Wehner, Nikolas, Nils Feldhus, et al. (2024) “QoEXplainer: Mediating Explainable Quality of Experience Models with Large Language Models.” QoMEX 2024 Demos / ACM IMX 2024

Wang, Qianli, et al. (2024) “LLMCheckup: Conversational Examination of Large Language Models via Interpretability Tools.” NAACL 2024

Schmitt, Vera, et al. (2024) “The Role of Explainability in Collaborative Human-AI Disinformation Detection.” FAccT 2024

Feldhus, Nils, et al. (2021) “Thermostat: A Large Collection of NLP Model Explanations and Analysis Tools.” EMNLP 2021: System Demonstrations